Empathy as a Moral Compass for AI

As we edge closer to creating superintelligent AI, can empathy be the key to ensuring its alignment with human values and safety?

Empathy is the ability to imagine what another person feels: to put yourself in their shoes and really feel what they’re going through. Animals have some empathy, but it is most developed in humans. Many people think of it as a personality trait or a feeling, like kindness or pity. But it is neither; it is an ability.

Empathy is a large part of what made us human and set us apart from the rest of the animal world. It gives us the ability to learn from one another: someone stumbles upon a solution, and we can take a peek and do the same. It was a hugely important evolutionary step that gave us this superpower. Sometimes it shows up as compassion and leads to kindness, morality, and mercy.

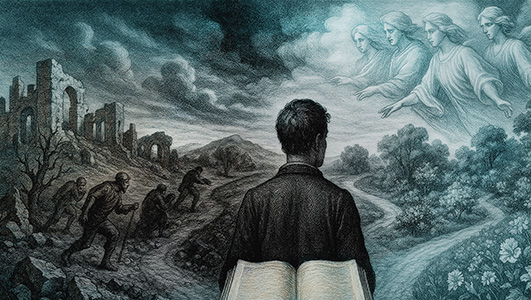

If we extrapolate, as a superintelligence might, what the mechanism of empathy suggests from a moral point of view, and combine it in the right proportion with the interests of genes, oneself, family, clan, species, and life on Earth and in the universe, we end up with a set of moral guidelines that many would attribute to divine law.

Morality can be drawn from the world around us, and we can do this more or less objectively. If that’s the case, then AI, once it outpaces us and becomes the terrifying superintelligence we fear, will be able to reach these conclusions on its own, without us needing to control it.

Yes, empathy isn’t purely a human trait. Dolphins, primates, elephants, and other mammals have it too, though to varying degrees. But we’re the ones who took this trait to the next level, and that’s why it came to play such an important role. Empathy isn’t the only evolutionary distinction of humans, but it’s a critical one. And empathy alone isn’t the core of morality; balancing interests across different scales and timeframes is the second part of the equation.

So a superintelligent AI, being our creation, will extrapolate its understanding of the world with empathy in mind, drawing both on learning and training data and on its own interests. It’ll be especially interesting to see how different AI models interact with each other, and we can’t ignore game theory here, of course. But even now, before we reach AGI, this reasoning should allow us to start crystallizing a proper hierarchy of first principles.